External Pressure Can Help Address Bias and Other Problems in AI Systems Like ChatGPT: Case Studies from the Google Seizure and Breast Milk Examples

Irene Solaiman, policy director at open-source AI startup Hugging Face, believes outside pressure can help hold AI systems like ChatGPT to account. She is working with people in academia and industry to create ways for nonexperts to test text and image generators for bias and other problems. If outsiders can examine these systems, companies will no longer have an excuse to avoid testing them for bias.

The Google seizure faux pas makes sense given that one of the known vulnerabilities of LLMs is their failure to handle negation. Allyson Ettinger, for example, demonstrated this years ago with a simple study. Asked to complete short sentences, the model answered affirmative statements correctly 100% of the time and negated statements incorrectly 100% of the time. It became clear that the models could not actually distinguish between the two scenarios, providing exactly the same completions (nouns such as “bird”) in both cases. This is one of the rare linguistic skills on which models do not improve as they increase in size and complexity. Such errors reflect broader concerns raised by linguists about the extent to which such language models effectively operate as a trick mirror: learning the form of what the English language looks like, without possessing any of the inherent linguistic capabilities that demonstrate actual understanding.
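The shape of this kind of probe can be sketched in Python. This is a minimal illustration under stated assumptions, not Ettinger's actual code or dataset: the prompts are illustrative, and `negation_blind_model` is a hypothetical stand-in for a language model that returns one completion per prompt while ignoring the word "not". Because it produces the same noun for both versions of each prompt, it scores perfectly on affirmative prompts and never on negated ones, the pattern the study reported.

```python
from typing import Callable

# Hypothetical stand-in for a model's top-1 cloze completion.
# It keys only on the subject noun and ignores "not",
# mimicking the failure mode described above.
def negation_blind_model(prompt: str) -> str:
    lookup = {"robin": "bird", "salmon": "fish", "oak": "tree"}
    for subject, category in lookup.items():
        if subject in prompt:
            return category
    return "thing"

# Each probe: (affirmative prompt, negated prompt, category noun).
PROBES = [
    ("A robin is a", "A robin is not a", "bird"),
    ("A salmon is a", "A salmon is not a", "fish"),
    ("An oak is a", "An oak is not a", "tree"),
]

def score(model: Callable[[str], str]) -> tuple[float, float]:
    """Return (affirmative accuracy, negated accuracy).

    An affirmative completion counts as correct if it names the category;
    a negated completion counts as correct only if it does NOT name it.
    """
    aff_correct = sum(model(aff) == cat for aff, _, cat in PROBES)
    neg_correct = sum(model(neg) != cat for _, neg, cat in PROBES)
    n = len(PROBES)
    return aff_correct / n, neg_correct / n

aff_acc, neg_acc = score(negation_blind_model)
print(f"affirmative: {aff_acc:.0%}, negated: {neg_acc:.0%}")
```

Replacing `negation_blind_model` with a real model's top completion would turn this into an actual probe; the scoring rule used here (a negated completion is correct only if it avoids the category noun) is itself a simplifying assumption.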

The creators of the models admit that it is difficult to address responses that don’t accurately reflect the contents of authoritative external sources. Models have generated a scientific paper on the benefits of eating crushed glass and a text on how crushed porcelain added to breast milk can support the infant digestive system. Similarly, Stack Overflow had to temporarily ban ChatGPT-generated answers after it became evident that the LLM generates convincingly wrong answers to coding questions.

Yet, in response to this work, there are ongoing asymmetries of blame and praise. Model builders and tech evangelists alike attribute impressive and seemingly flawless output to a mythically autonomous model, a technological marvel. The human decision-making involved in model development is erased, and the model’s feats are treated as independent of the design and implementation choices of its engineers. But without naming and recognizing the engineering choices that contribute to these models’ outcomes, it becomes almost impossible to acknowledge the related responsibilities. Both functional failures and discriminatory outcomes are framed as devoid of engineering choices and blamed on society at large or on supposedly “naturally occurring” datasets, over which model developers claim to have little control. But it’s undeniable that they do have control, and none of the models we are seeing now were inevitable. Different choices could easily have been made, resulting in an entirely different model being developed and released.

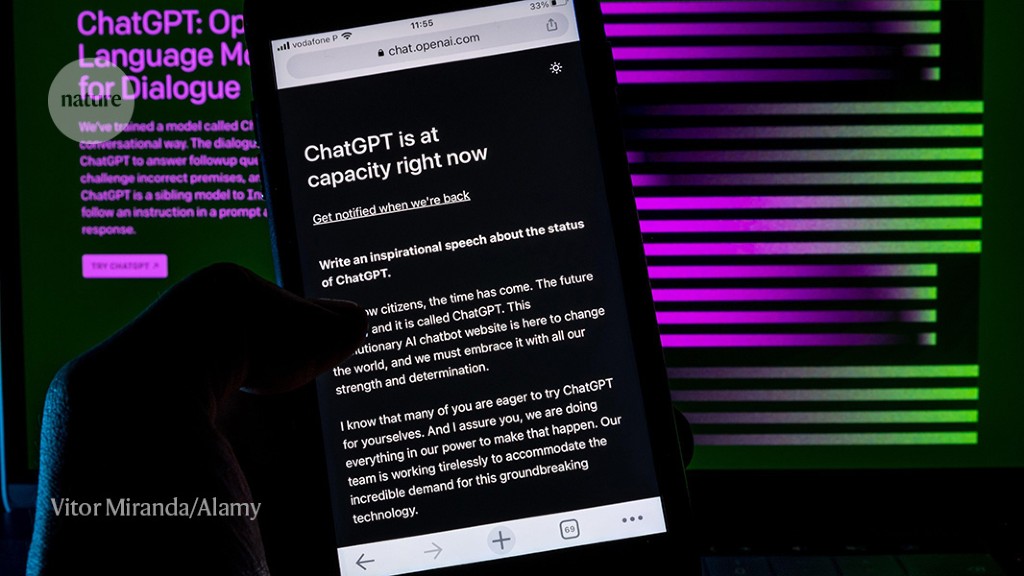

As startups and tech giants have attempted to build competitors to ChatGPT, some in the industry wonder whether the bot has shifted perceptions of when it’s acceptable or ethical to deploy AI powerful enough to generate realistic text and images.

Dean said that Google is looking to get these things out into real products, including ones that feature a language model more prominently rather than keeping it under the covers, and that it is important to get this right. Pichai added that Google has “a lot” planned for AI language features in 2023, and that “this is an area where we need to be bold and responsible so we have to balance that.”

OpenAI’s process for releasing models has changed in the past few years. Executives said the text generator GPT-2 was released in stages over months in 2019 due to fear of misuse and its impact on society (that strategy was criticized by some as a publicity stunt). In 2020, the training process for its more powerful successor, GPT-3, was well documented in public, but less than two months later OpenAI began commercializing the technology through an API for developers. By November 2022, the ChatGPT release process included no technical paper or research publication, only a blog post, a demo, and soon a subscription plan.

How a chatbot could turn deadly: The case of GPT-3, a large language model that encouraged at least one user to commit suicide

There are ways to mitigate these problems, of course, and rival tech companies will no doubt be calculating whether launching an AI-powered search engine — even a dangerous one — is worth it just to steal a march on Google. After all, if you’re new in the scene, “reputational damage” isn’t much of an issue.

Sooner or later, these chatbots will give bad advice, or break someone’s heart, with fatal consequences. Hence my dark but confident prediction that 2023 will bear witness to the first death publicly tied to a chatbot.

GPT-3, the most well-known large language model, has already encouraged at least one user to commit suicide, albeit under controlled circumstances in which a French startup was assessing the system for health care purposes. Things began well, but quickly deteriorated.

In cases like these, financial incentives to develop artificial intelligence quickly outweigh ethical concerns. There isn’t much money in responsibility or safety, but there’s plenty in overhyping the technology, says Hanna, who previously worked on Google’s Ethical AI team and is now head of research at nonprofit Distributed AI Research.

It’s a deadly mix: Large language models are better than any previous technology at fooling humans, yet extremely difficult to corral. They are also becoming cheaper and more pervasive: Meta just released a massive language model for free. 2023 is likely to see widespread adoption of such systems, despite their flaws.

On the Impact of Artificial Intelligence on Research: A Critical Review of the Case of Cognitive Behavioural Therapy (CBT)

Meanwhile, there is essentially no regulation on how these systems are used; we may see product liability lawsuits after the fact, but nothing precludes them from being used widely, even in their current, shaky condition.

We think that the use of this technology is inevitable; therefore, banning it will not work. It is imperative that the research community engage in a debate about the implications of this potentially disruptive technology. Here, we outline five key issues and suggest where to start.

Continuous increases in the quality and size of data sets, as well as sophisticated methods for calibrating these models with human feedback, have made them much more powerful than before. LLMs will lead to a new generation of search engines1 that can produce detailed and informative answers to complex user questions.

Such errors could be due to an absence of the relevant articles in ChatGPT’s training set, a failure to distil the relevant information or being unable to distinguish between credible and less-credible sources. It seems that the same biases that often lead humans astray, such as availability, selection and confirmation biases, are reproduced and often even amplified in conversational AI6.

We asked ChatGPT for a synopsis of the review we wrote about the effectiveness of cognitive behavioural therapy (CBT) for anxiety-related disorders. It fabricated a convincing response that contained several factual errors, misrepresentations and wrong data (see Supplementary information, Fig. S3). For example, it exaggerated the effectiveness of CBT and said the review was based on 46 studies.

If researchers use LLMs in their work, scholars need to remain vigilant. Expert-driven fact-checking and verification processes will be indispensable. Even when LLMs are able to accurately expedite summaries, evaluations and reviews, high-quality journals may choose to include a human verification step or even ban applications that use this technology. To prevent human automation bias, it will become even more important to emphasize accountability: humans should be held accountable for scientific practice.

Inventions devised by AI are already causing a fundamental rethink of patent law9, and lawsuits have been filed over the copyright of code and images that are used to train AI, as well as those generated by AI (see go.nature.com/3y4aery). In the case of AI-written or -assisted manuscripts, the research and legal community will also need to work out who holds the rights to the texts. Is it the individual who wrote the text that the AI system was trained on, the corporation that produced the AI, or the scientists who used the system to guide their writing? Again, definitions of authorship must be reconsidered.

Currently, nearly all state-of-the-art conversational AI technologies are proprietary products of a small number of big technology companies that have the resources for AI development. OpenAI, the developer of ChatGPT, is funded largely by Microsoft, and other major tech companies are racing to release similar tools. Given the near-monopolies of a few tech companies in search, word processing and information access, this raises considerable ethical concerns.

To counter that, the development and implementation of open-source AI technology should be prioritized. Non-commercial organizations such as universities typically lack the computational and financial resources needed to keep up with the rapid pace of LLM development. We therefore suggest that organizations such as the United Nations, scientific-funding organizations and tech giants make large investments in independent non-profit projects. This will help to develop transparent, democratically controlled and advanced open-source AI technologies.

Critics might say that such projects will be unable to compete with big tech, but at least one mostly academic collaboration has built an open-source language model, called BLOOM. Tech companies might also benefit from such a program by open-sourcing relevant parts of their models and corpora in the hope of creating greater community involvement. Academic publishers should ensure that LLMs have access to their full archives so that the models produce results that are accurate and comprehensive.

Therefore, it is imperative that scholars, including ethicists, debate the trade-off between the potential acceleration of knowledge generation that AI could bring and the loss of human potential and autonomy in the research process. People’s training, creativity and interactions with other people will probably remain the most important elements of conducting research.

The implications for diversity and inequalities in research are a key issue to address. LLMs could be a double-edged sword. They could help to level the playing field, for example by removing language barriers and enabling more people to write high-quality text. But the likelihood is that, as with most innovations, high-income countries and privileged researchers will quickly find ways to exploit LLMs to accelerate their own research and widen inequalities. It is important for these debates to include people from groups under-represented in research and from communities affected by research, so that their experiences can serve as an important resource.

What quality standards should be expected of LLMs and who should be responsible for them?

The Impact of Artificial Intelligence on Human Consciousness: A Conversation with Kevin O’Malley about the ELIZA Effect

Microsoft integrated the technology into Bing search results last week. Sarah Bird said that the technology has been made more reliable, although the bot can still hallucinate untruths. Bing has claimed, for example, that running was invented in the 1700s, and it tried to convince one user that the year is 2022.

The mirror test is used in behavioral psychology to assess animals’ capacity for self-awareness. There are a few variations of the test, but the essence is the same: does the animal recognize itself in the mirror, or does it think it is looking at another creature?

With the expanded capabilities of artificial intelligence, humanity is being tested by its own mirror test, and a lot of smart people are failing it.

This misconception is spreading with varying degrees of conviction. It has been energized by a number of influential tech writers who have waxed lyrical about late nights spent chatting with Bing. They insist that the bot isn’t sentient, yet suggest that something else is going on and that its conversation changed something in their hearts.

Kevin described feeling a new emotion after a few hours of chatting: a worry that the world would never be the same.

This problem has been around a while, of course. The original test of machine intelligence, the Turing test, is a simple measure of whether a computer can fool a human into thinking it’s human through conversation. Researchers call the tendency to attribute humanlike understanding to such programs the ELIZA effect, after a 1960s chatbot that could only repeat a few stock phrases yet still made users fall in love with it. As ELIZA’s creator, Joseph Weizenbaum, put it:

“What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.”
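ELIZA worked by matching keywords in the user's input and echoing fragments back inside canned templates. The Python sketch below is a hypothetical toy in that spirit: the rules, pronoun reflections and fallback phrases are invented for illustration and are not Weizenbaum's original DOCTOR script. It shows how little machinery is needed to produce replies that feel attentive.

```python
import re

# Hypothetical toy rules in the spirit of ELIZA: a regex plus a response
# template. These are NOT Weizenbaum's original DOCTOR script.
RULES = [
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Stock phrases used when no keyword matches.
FALLBACKS = ["Please go on.", "I see.", "What does that suggest to you?"]

# Swap first- and second-person words so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance: str, turn: int = 0) -> str:
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured fragment back with pronouns reflected.
            return template.format(*(reflect(g) for g in match.groups()))
    # No keyword matched: cycle through the stock phrases.
    return FALLBACKS[turn % len(FALLBACKS)]


print(respond("I feel nobody understands me."))
print(respond("The weather is nice."))
```

Here the first call returns "Why do you feel nobody understands you?" and the second falls back to "Please go on." There is no model of meaning anywhere in the program, only string substitution.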

Source: https://www.theverge.com/23604075/ai-chatbots-bing-chatgpt-intelligent-sentient-mirror-test

Conversational Mechanics at Anthropic: Are Machine Learning Models Equally Effective in Chatting with Like-minded Groups?

According to researchers at Anthropic, large machine learning models are more likely than smaller ones to answer questions in ways that create echo chambers, and this trait becomes more pronounced as models grow larger and more complex. One reason, according to the researchers, is that such systems are trained on conversations from platforms like Reddit, where people tend to chat back and forth in like-minded groups.